Exam design has traditionally been a labor-intensive process, requiring alignment with learning outcomes, curriculum standards, and fairness principles. But with the surge of generative AI tools in 2025, educators and training professionals now have a powerful ally.

What if AI could help you create smarter, fairer, and more meaningful exams—without compromising academic integrity?

In this post, we explore how generative AI (Gen AI) is reshaping exam creation, question variability, assessment alignment, and learner fairness. Whether you work in a university, a professional training organization, or a corporate L&D team, these insights can help you improve both exam quality and learner performance.

Why Traditional Exam Design Falls Short

While traditional exam design methods have their strengths, they are often:

- Time-consuming: Writing well-balanced questions and rubrics can take weeks.

- Biased: Human-designed questions may reflect unconscious assumptions.

- Static: The same exam for all learners may not reflect individual progress.

- Misaligned: Questions often fail to directly test the intended learning outcomes.

Enter AI-enhanced exam design, which supports efficiency, consistency, and learner-centered testing—when used ethically and intentionally.

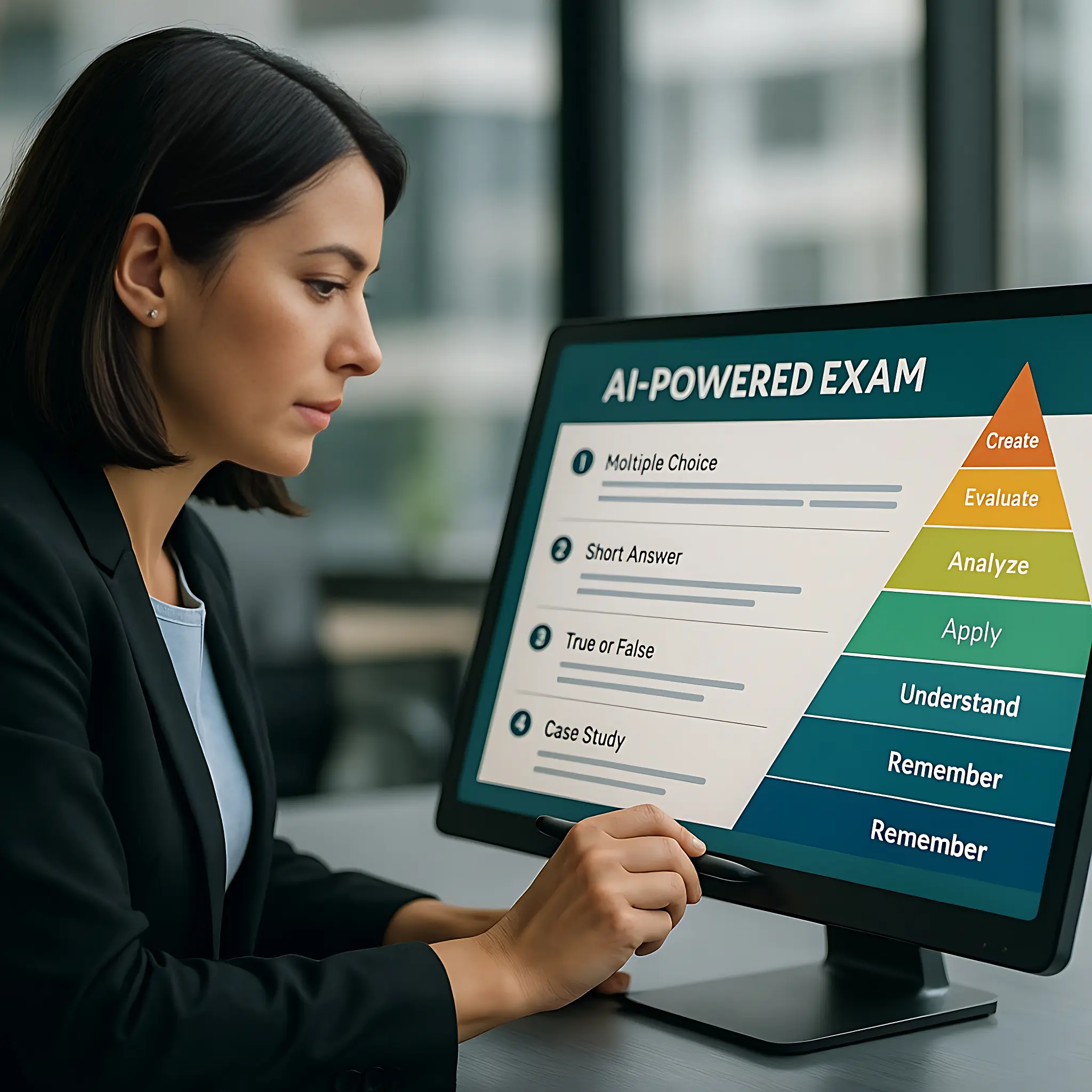

What AI Can Do in Exam Design

Here are five key ways AI is now improving exam design workflows:

| Function | AI Contribution |
|---|---|
| Question Generation | Rapidly drafts MCQs, case-based, and open-ended items |
| Bloom’s Taxonomy Alignment | Generates questions targeted at a specified cognitive level |
| Adaptive Assessment Design | Builds item banks with variable difficulty |
| Bias Detection | Flags potentially biased cultural or gender-coded language |
| Feedback Personalisation | Provides tailored post-exam feedback based on performance |
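To make "item banks with variable difficulty" more concrete, here is a minimal Python sketch of adaptive item selection. It assumes each item carries a hypothetical 1 to 5 difficulty rating and uses a simple up/down staircase rule (step up after a correct answer, down after a wrong one) rather than the item response theory models that production adaptive tests typically rely on. `Item`, `next_item`, and `update_level` are illustrative names, not part of any particular platform.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Item:
    item_id: str
    difficulty: int  # hypothetical scale: 1 (easiest) to 5 (hardest)


def next_item(bank: List[Item], level: int, used: Set[str]) -> Optional[Item]:
    """Return the unused item whose difficulty is closest to the learner's level."""
    candidates = [item for item in bank if item.item_id not in used]
    if not candidates:
        return None
    # Ties are broken by item_id so the choice is reproducible.
    return min(candidates, key=lambda item: (abs(item.difficulty - level), item.item_id))


def update_level(level: int, correct: bool, lowest: int = 1, highest: int = 5) -> int:
    """Staircase rule: move up one level after a correct answer, down one after a wrong one."""
    step = 1 if correct else -1
    return max(lowest, min(highest, level + step))


bank = [Item("q1", 1), Item("q2", 2), Item("q3", 3), Item("q4", 4), Item("q5", 5)]
level, used = 2, set()
for answered_correctly in [True, True, False]:
    item = next_item(bank, level, used)
    used.add(item.item_id)
    print(item.item_id, "at difficulty", item.difficulty)
    level = update_level(level, answered_correctly)
```

Here a learner starting at level 2 receives the difficulty-2 item, and each correct answer moves the next pick one step harder. A staircase is easy to explain to learners, but it ignores how well each item discriminates between ability levels, which is what IRT-based designs add.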

Step-by-Step: Incorporating AI into Your Exam Design Process

Step 1: Define the Learning Outcomes

Before using any AI tool, ensure your course or module learning outcomes are:

- Clear

- Measurable

- Aligned with your curriculum or competency framework

AI can only generate good assessment items if the learning target is specific.

Example Learning Outcome:

“Apply project management principles to design a project timeline and risk plan.”
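One quick way to test the "measurable" criterion is to look at an outcome's verbs. The minimal Python sketch below flags outcomes that rely on hard-to-assess verbs such as "understand" or "know". Both verb lists are short, hypothetical examples rather than an official Bloom's verb list; substitute the list your institution or framework uses.

```python
from typing import Dict, List

# Hypothetical, deliberately short verb lists; replace with your framework's own.
MEASURABLE_VERBS = {"apply", "analyse", "analyze", "compare", "create", "design",
                    "evaluate", "explain", "identify"}
VAGUE_VERBS = {"appreciate", "know", "learn", "understand"}


def check_outcome(outcome: str) -> Dict[str, List[str]]:
    """List the measurable and vague verbs that appear in a learning outcome."""
    # Strip surrounding punctuation, including curly quotes, before matching.
    words = {word.strip(".,;:!?'\"“”‘’").lower() for word in outcome.split()}
    return {
        "measurable": sorted(words & MEASURABLE_VERBS),
        "vague": sorted(words & VAGUE_VERBS),
    }


def is_measurable(outcome: str) -> bool:
    """Treat an outcome as measurable if it uses a listed action verb and no vague verb."""
    found = check_outcome(outcome)
    return bool(found["measurable"]) and not found["vague"]


print(check_outcome("Apply project management principles to design a project timeline and risk plan."))
```

For the example outcome, the check returns `{'measurable': ['apply', 'design'], 'vague': []}`. A word-list match cannot tell whether a verb is actually used as the outcome's action, so treat the result as a prompt for review, not a verdict.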

Step 2: Use Generative AI to Draft Initial Questions

Platforms such as ChatGPT, Gemini, and Claude can generate question drafts, but only when prompts are precise.

Prompt Example:

“Create 3 exam questions aligned with Bloom’s level 3 (Apply), based on this learning outcome: ‘Apply project management principles to design a project timeline.’ One MCQ, one short answer, one scenario-based.”

A prompt like this typically yields:

- Multiple-choice with plausible distractors

- Short-answer targeting application

- Real-world scenario for deeper evaluation
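If you write many such prompts, a small template keeps them consistent across outcomes and levels. The Python sketch below rebuilds the prompt example above from its parts. `build_question_prompt` and the level-name table are illustrative assumptions (level names follow the revised Bloom's Taxonomy), and the step of sending the prompt to ChatGPT, Gemini, or Claude is left out because each vendor's API differs.

```python
from typing import List

# Revised Bloom's Taxonomy levels, lowest to highest cognitive demand.
BLOOM_LEVELS = {1: "Remember", 2: "Understand", 3: "Apply",
                4: "Analyse", 5: "Evaluate", 6: "Create"}


def build_question_prompt(outcome: str, level: int, formats: List[str]) -> str:
    """Assemble a question-generation prompt for one outcome, Bloom level, and set of formats."""
    if level not in BLOOM_LEVELS:
        raise ValueError(f"Bloom level must be 1-6, got {level}")
    if not formats:
        raise ValueError("at least one question format is required")
    text = outcome.strip()
    if not text.endswith("."):
        text += "."
    format_list = ", ".join(f"one {fmt}" for fmt in formats)
    # Capitalise only the first letter so acronyms such as "MCQ" stay intact.
    format_list = format_list[0].upper() + format_list[1:]
    return (
        f"Create {len(formats)} exam questions aligned with Bloom's level {level} "
        f"({BLOOM_LEVELS[level]}), based on this learning outcome: '{text}' "
        f"{format_list}."
    )


prompt = build_question_prompt(
    "Apply project management principles to design a project timeline.",
    3,
    ["MCQ", "short answer", "scenario-based"],
)
print(prompt)
```

Keeping the outcome, level, and formats as separate inputs makes it easy to generate a matching prompt for every outcome in a module and to audit later which level each draft question was requested at.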

Step 3: Apply Bias and Language Filters

AI tools like Textio, Grammarly Premium, and custom-built models can help evaluate:

- Cultural bias

- Gender-coded language

- Accessibility of vocabulary (for EAL learners)

This helps make your exam more inclusive and equitable.
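Commercial tools are far more sophisticated, but the basic idea of a language screen can be sketched in a few lines of Python. The example below flags gender-coded terms from a small, hypothetical word list and sentences longer than an assumed 25-word limit, as a rough proxy for accessibility to EAL learners. It only surfaces candidates for a human reviewer and does not reproduce how Textio, Grammarly, or any other product works.

```python
import re
from typing import Dict, List

# Hypothetical starter list; a real screen would use a vetted, regularly reviewed lexicon.
GENDER_CODED_TERMS = {"chairman", "he", "her", "him", "his", "manpower", "salesman", "she"}
MAX_WORDS_PER_SENTENCE = 25  # assumed threshold for EAL-friendly sentence length


def screen_question(text: str) -> Dict[str, List[str]]:
    """Flag gender-coded terms and overly long sentences for human review."""
    words = re.findall(r"[a-z']+", text.lower())
    gendered = sorted({word for word in words if word in GENDER_CODED_TERMS})
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    long_sentences = [s for s in sentences if len(s.split()) > MAX_WORDS_PER_SENTENCE]
    return {"gendered_terms": gendered, "long_sentences": long_sentences}


print(screen_question("The chairman asks his team to review the project plan."))
```

This example flags "chairman" and "his", and a reviewer might rewrite the stem as "The chair asks the team to review the project plan." Word lists miss context (a case study about a named person may legitimately use "her"), which is why the output is a review queue rather than an automatic rewrite.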

Step 4: Align with Bloom’s Taxonomy or Competency Frameworks

Use resources such as Bloom’s Wheel, RubricBuilder, or AI-based curriculum mapping tools to check that the questions:

- Increase in difficulty logically

- Cover multiple cognitive levels

- Match your expected learner progression

This helps ensure a well-balanced, valid assessment.
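The three checks above can also be automated once each question is tagged with its Bloom level. This minimal Python sketch assumes the tags already exist (assigned by a reviewer or an AI tool), treats Bloom level as a rough proxy for difficulty, and reads "increase in difficulty logically" as a non-decreasing sequence of levels. The three-level minimum for coverage is an arbitrary, adjustable assumption.

```python
from collections import Counter
from typing import Dict, List


def check_bloom_alignment(levels: List[int], min_distinct_levels: int = 3) -> Dict[str, object]:
    """Summarise Bloom coverage for questions listed in exam order (levels 1-6)."""
    if any(level not in range(1, 7) for level in levels):
        raise ValueError("Bloom levels must be integers from 1 to 6")
    counts = Counter(levels)
    return {
        # How many questions sit at each cognitive level.
        "distribution": dict(sorted(counts.items())),
        # Does the exam span enough distinct levels?
        "covers_multiple_levels": len(counts) >= min_distinct_levels,
        # Is each question at the same or a higher level than the one before it?
        "difficulty_increases": all(a <= b for a, b in zip(levels, levels[1:])),
    }


print(check_bloom_alignment([1, 2, 2, 3, 4, 5]))
```

Comparing the `distribution` against your intended blueprint (for example, mostly Apply and Analyse items for an applied module) is often more informative than the two yes/no flags.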

Step 5: Generate Automated Marking Schemes and Feedback Templates

Once your AI-assisted exam is drafted, use the same model to generate:

- Marking rubrics (for essays or long answers)

- Answer keys (for MCQs or numerical questions)

- Automated feedback scripts (for LMS integration)

Example Feedback Prompt:

“Generate formative feedback for a student who scored 60% on an AI ethics case study exam. Highlight what was done well and 2 suggestions for improvement.”

This can save faculty hours of post-exam marking and encourages learners to reflect on their performance.
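For answer keys and feedback, the mechanical part can be scripted. The Python sketch below scores MCQ responses against a key and then fills the example feedback prompt with the learner's actual score. `score_mcq` and `feedback_prompt` are hypothetical helpers, the question IDs and key are made up, and the resulting prompt would still be sent to your chosen model through its own interface.

```python
from typing import Dict


def score_mcq(responses: Dict[str, str], answer_key: Dict[str, str]) -> float:
    """Return the percentage of keyed questions answered correctly (blank = wrong)."""
    if not answer_key:
        raise ValueError("answer_key must contain at least one question")
    correct = sum(
        1
        for question_id, key in answer_key.items()
        if responses.get(question_id, "").strip().upper() == key.strip().upper()
    )
    return round(100 * correct / len(answer_key), 1)


def feedback_prompt(score: float, exam_name: str, n_suggestions: int = 2) -> str:
    """Fill the formative-feedback prompt template with one learner's score."""
    return (
        f"Generate formative feedback for a student who scored {score:g}% on {exam_name}. "
        f"Highlight what was done well and {n_suggestions} suggestions for improvement."
    )


answer_key = {"Q1": "B", "Q2": "D", "Q3": "A", "Q4": "C", "Q5": "B"}  # hypothetical key
responses = {"Q1": "b", "Q2": "D", "Q3": "C", "Q5": "B"}  # Q4 left blank
score = score_mcq(responses, answer_key)
print(feedback_prompt(score, "an AI ethics case study exam"))
```

With three of five items correct, this learner scores 60%, which reproduces the example feedback prompt word for word. Scoring deterministically in code, and reserving the model for the wording of feedback, keeps the marks themselves free of model errors.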

Case Study: AI-Powered Exam Design at TheCaseHQ

Platform: TheCaseHQ.com

Context: Online certification programs in AI strategy, HR, and leadership

Problem: Manually designed exams were inconsistent across modules and lacked Bloom-level alignment.

Solution:

TheCaseHQ implemented a Gen AI-based workflow:

- Course leads submitted learning outcomes (LOs) and grading criteria

- AI generated initial MCQs, case-based questions, and rubrics

- Subject-matter expert (SME) review checked quality, alignment, and fairness

- Post-exam feedback was generated automatically

Results:

- Design time dropped by 65%

- Pass rates improved

- Students reported greater confidence in assessment transparency

Benefits of AI in Exam Design

- Scalability: Generate assessments for hundreds of learners or courses

- Speed: Draft exams in hours, not weeks

- Fairness: Use bias detection to improve inclusivity

- Alignment: Map questions closely to learning outcomes

- Adaptivity: Support individualised assessment paths

- Feedback: Automate post-exam feedback to extend learning

Potential Risks and How to Manage Them

| Risk | Mitigation |
|---|---|
| Over-reliance on AI | Always review questions manually |
| Shallow questions | Use Bloom’s Taxonomy prompts to force deeper cognition |
| Data privacy | Avoid entering learner data into public AI tools |
| AI hallucinations | Cross-check for accuracy, especially technical content |
| Misalignment | Always validate questions against outcomes |

Ethical Considerations in AI-Based Exams

- Transparency: Let learners know AI was used to create or grade the exam

- Academic Integrity: Avoid question banks that closely resemble publicly available material, since learners (and AI tools) may already have seen it

- Data Control: Use secure AI systems that comply with GDPR, FERPA, or whichever data-protection rules apply to your learners

- Inclusivity: Test across language levels and accessibility formats

Remember: AI should enhance fairness and learning—not automate bias or shortcuts.

Future Outlook: AI + Exams in 2026 and Beyond

What’s next?

- Machine-readable rubrics linked directly to blockchain credentials

- AI-generated alternate exam versions to prevent cheating

- Real-time adaptive testing with embedded feedback

- Generative simulations for experiential assessment (VR/AR + AI)

- Rubric-to-result pipelines automatically linked to learner portfolios
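Generating genuinely different questions for each version is where generative AI comes in, but a simpler building block already exists: giving each learner the same items in a different order. The Python sketch below deterministically shuffles question order and answer-option order using a hash of an exam ID and a learner ID, so any learner's version can be rebuilt later for review or appeals. The question structure and `make_version` are illustrative assumptions, and this is plain randomisation, not AI generation.

```python
import hashlib
import random
from typing import Dict, List


def make_version(questions: List[Dict], exam_id: str, learner_id: str) -> List[Dict]:
    """Return a per-learner copy of the exam with question and option order shuffled."""
    # Derive a stable seed from the exam and learner IDs so the same version can be rebuilt.
    digest = hashlib.sha256(f"{exam_id}:{learner_id}".encode("utf-8")).hexdigest()
    rng = random.Random(int(digest, 16))
    version = []
    for question in questions:
        options = list(question["options"])  # copy so the master exam is untouched
        rng.shuffle(options)
        version.append({**question, "options": options})
    rng.shuffle(version)
    return version


master = [
    {"id": "Q1", "stem": "Which chart shows task dependencies over time?",
     "options": ["Gantt chart", "Pie chart", "Histogram"], "answer": "Gantt chart"},
    {"id": "Q2", "stem": "Which document records identified project risks?",
     "options": ["Risk register", "Invoice", "Org chart"], "answer": "Risk register"},
]
for learner in ["learner-001", "learner-002"]:
    print(learner, [q["id"] for q in make_version(master, "pm-exam-1", learner)])
```

Because the answer is stored as option text rather than a letter, shuffling options cannot break the answer key. Note that Python only guarantees the same shuffle for the same seed within a given Python version, so store the generated versions if they must be reproduced long term.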

AI isn’t just a tool—it’s becoming a co-designer of the learning experience.

Visit The Case HQ for 95+ courses

Read More:

How AI Is Transforming Executive Leadership in 2025

How Case Studies Build Strategic Thinking in Online Learning

From Learning to Leading: Using Case Studies in Executive Education

Best Practices for Integrating Case Studies in Online Courses

Case Method vs Project-Based Learning: What Works Better in 2025?

How to Upskill in AI Without a Technical Background

Why Microcredentials Are the Future of Professional Growth

Best Practices for Building AI-Supported Marking Schemes

Should Rubrics Be Machine-Interpretable? The Debate

Multi-Dimensional Rubrics Powered by AI Insights

Using Gen AI to Simplify Complex Rubrics

Aligning Bloom’s Taxonomy with AI Rubric Generators

https://thecasehq.com/incorporating-ai-into-exam-design-for-better-outcomes/?fsp_sid=4128
